Impressive, But Wrong: The Hidden Risk of LLM-Generated Documentation

The Rise of AI-Generated Docs

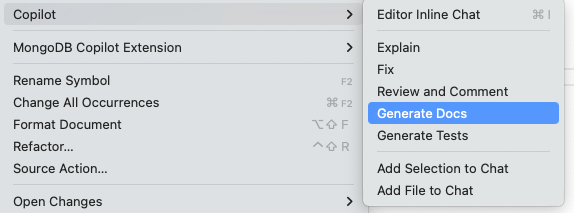

Now that LLMs and GenAI are being integrated into IDEs and other productivity tools used by knowledge workers across every domain, one of the first features people often encounter is documentation generation. For example, in VSCode you can highlight just about any block of code and hit “Generate Docs,” and 99 times out of 100 you'll get something that looks impressive — neatly formatted, grammatically clean, and syntactically correct.

But I can’t help wondering whether this functionality is actually making things better or worse.

Agile’s Skepticism of Comments

If you’ve spent time in Agile software teams, you know the philosophy: prefer self-documenting code over comments. Clear naming, clean structure, and sound design come first. Comments are a fallback, typically used when something genuinely can’t be made clearer in code alone.

This is one of the more widely debated Agile philosophies, but there are good reasons for it. Source code comments tend to:

Drift out of sync with the code

Mask bad design

Waste time and space when they’re just restating what the code already says

LLMs don’t fix any of that in most current implementations. In fact, they make it easier to generate large volumes of authoritative-looking, ultimately unhelpful commentary. The only real benefit is that they can generate new comments in real time — assuming you don’t trust the old ones. But if you’re in that mode of thinking, aren’t we back to questioning the point of the comments in the first place? Just rely on “Explain” (see above) instead.
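To make the first item in the list above concrete, here is a small, purely hypothetical sketch of comment drift. The class `OrderTotals`, the method `totalCents`, and the tax scenario are invented for illustration. The assumption is that the Javadoc was accurate when written and that a later change added tax without anyone touching the comment.

```java
import java.util.List;

// Hypothetical example: names and scenario are invented for illustration.
final class OrderTotals {
    private OrderTotals() {}

    /**
     * Returns the order total in cents, excluding tax.
     *
     * (Stale on purpose: the body below now includes tax,
     * but the sentence above was never updated.)
     */
    static long totalCents(List<Long> itemCents, double taxRate) {
        // Sum the line items, then apply tax and round to whole cents.
        long subtotal = itemCents.stream().mapToLong(Long::longValue).sum();
        return Math.round(subtotal * (1.0 + taxRate));
    }
}
```

Calling `OrderTotals.totalCents(List.of(1000L, 500L), 0.10)` returns 1650, not the 1500 the comment promises. The code compiles and behaves consistently, so nothing forces anyone to notice that the comment is wrong.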

LLMs Don’t Fix the Real Problems

However, there are teams and organizations with policies that require comments on every public method and field. These policies predate LLMs and have long been supported by template-based doc generators that produce profound insights like:

    /**
     * Returns the name of the customer.
     *
     * @return the customer's name
     */
    public String getCustomerName() {
        return customerName;
    }

Value added, right?

In theory, code reviews should protect us from this kind of nonsense. In practice, most reviewers don’t heavily scrutinize comments — and many are actively impressed by a flood of nicely formatted, authoritative-sounding text. Ironically, that’s exactly what current-generation LLMs are best at producing: Impressive-sounding nonsense.

It’s important to recognize that LLM-generated documentation can improve significantly when the AI has access to richer, more specific context. This might include detailed API schemas, domain-specific glossaries, historical change logs, or even integration with issue trackers and test cases. The better the AI understands the surrounding environment and business rules, the more accurate and relevant its outputs can become. The better tools are currently improving rapidly in this respect.

However, even with improved context, the fundamental challenges remain. Context helps reduce hallucinations and generic outputs, but it doesn’t eliminate the risk of outdated or misleading documentation. Without ongoing human validation and governance, AI-generated docs — context-rich or not — can still drift from reality, especially in fast-moving projects. So while context is a powerful tool to improve quality, it’s no substitute for thoughtful review and stewardship.

Code Review May Not Save You

In most software development teams, review processes are optimized for catching logic errors, not misleading documentation (or the implementation of incorrect requirements, but that’s another issue). Over the years I’ve seen too many pull requests where nobody touches the comments, even when they’re wrong or useless. And now that GenAI can produce thousands of lines of documentation that sound right and check the “comments” box, that dynamic gets worse.

The problem isn't just bad information — it’s false confidence. Comments that are vague, outdated, or even hallucinated can derail debugging sessions, mislead new contributors, or even confuse the original author six months down the road. And when code reviewers skim right past them, rot sets in.

What About Everyone Else?

At least software developers have spent enough time with misleading or outdated documentation to approach comments with a healthy dose of skepticism. But what happens when LLM-generated documentation gets pushed out to broader knowledge-worker audiences — people who don't have that instinct from years of getting burned?

That’s when it occurred to me that this might be a bigger problem than we think.

The Business Data Catalog

I was watching a demo of AWS DataZone, where a business data catalog was populated using LLM-generated documentation. Most of the output was impressively verbose, and it struck me as almost entirely useless. And yet it looked so good. My fear is that users will start trusting this content by default, making decisions based on documentation that sounds right but isn’t.

If that’s your data steward, product analyst, or executive basing a decision on this, you’ve got a serious risk on your hands.

What We Can Do About It

So how do we mitigate the risk of bad, incorrect, outdated documentation generated by LLMs? Whether you’re a developer, data steward, analyst, or team lead, here are a few ideas:

Always require human review before publishing AI-generated documentation. In code, that’s your code reviewer. In data, that’s your data steward. Don’t skip this step.

Trigger reviews when the source changes, whether that’s a codebase, schema, API, or product spec. Automate this where possible. For example, you might automatically open a ticket whenever the resource underlying a piece of documentation changes.

Label and timestamp AI-generated content. Show when it was created, and whether it’s been reviewed. This seems obvious, but it is skipped surprisingly often.

Enable feedback loops. Give users a way to flag or comment on incorrect or inadequate documentation right where they’re reading it.

Set expiration policies. Critical documentation should be re-reviewed periodically, even if nothing looks broken.

Remember that the AI is a tool, not a replacement for humans. Especially for C-level executives, it’s critical to understand that AI-generated outputs should augment human expertise—not replace it. Just because something is generated by AI doesn’t mean it’s correct or trustworthy without human oversight.
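Several of the ideas above (labeling and timestamping, review before publishing, re-review when the source changes, and expiration) can be sketched as a small piece of metadata attached to each generated document. This is a minimal illustration under stated assumptions, not a reference to any particular tool: `DocRecord`, `DocStatus`, `DocGovernance`, and the idea of fingerprinting the source with SHA-256 are all hypothetical names and choices made for this example.

```java
import java.math.BigInteger;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.time.Duration;
import java.time.Instant;

/** Hypothetical provenance record for one piece of documentation. */
record DocRecord(
        String text,
        boolean aiGenerated,       // label shown to readers
        Instant generatedAt,       // when the text was produced
        String sourceFingerprint,  // hash of the code or schema it describes
        Instant reviewedAt,        // null until a human signs off
        String reviewedBy) {

    /** Returns a copy marked as reviewed by a human at the given time. */
    DocRecord markReviewed(String reviewer, Instant when) {
        return new DocRecord(text, aiGenerated, generatedAt,
                sourceFingerprint, when, reviewer);
    }
}

enum DocStatus { UNREVIEWED, STALE, EXPIRED, CURRENT }

/** Hypothetical helper that decides whether a document can still be trusted. */
final class DocGovernance {
    private DocGovernance() {}

    /** Hex-encoded SHA-256 of the source text the documentation describes. */
    static String fingerprint(String source) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest(source.getBytes(StandardCharsets.UTF_8));
            return String.format("%064x", new BigInteger(1, hash));
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is mandatory on every Java platform implementation.
            throw new IllegalStateException(e);
        }
    }

    /**
     * UNREVIEWED: no human has signed off yet.
     * STALE: the source changed since the text was generated.
     * EXPIRED: the last review is older than maxAge.
     */
    static DocStatus status(DocRecord doc, String currentSource,
                            Instant now, Duration maxAge) {
        if (doc.reviewedAt() == null) {
            return DocStatus.UNREVIEWED;
        }
        if (!doc.sourceFingerprint().equals(fingerprint(currentSource))) {
            return DocStatus.STALE;
        }
        if (doc.reviewedAt().plus(maxAge).isBefore(now)) {
            return DocStatus.EXPIRED;
        }
        return DocStatus.CURRENT;
    }
}
```

In a setup like this, a `STALE` or `EXPIRED` result would be the natural place to open a review ticket, and the `aiGenerated`, `generatedAt`, and `reviewedBy` fields are what a catalog or doc viewer could display next to the text. The 90-day or similar value passed as `maxAge` is a policy decision, not something the code can choose for you.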

Let’s Build This the Right Way

If you’re navigating the challenges of adopting AI into your software development or data systems, I can help. I work with engineering and data teams to build practical, trustworthy systems that make smart use of AI without losing sight of code quality, maintainability, or long-term clarity.

Let’s talk about how to avoid performative AI automation theater and start building tools and teams that stay grounded, even in the age of LLMs.